Innovating Curriculum Review with Large Language Models

July 17, 2024

The world of higher education is evolving, and technology has played a big role in pushing it forward. Artificial Intelligence (AI) is increasingly integrated into every industry, and higher education is no exception. One exciting development is the use of AI to make curriculum evaluation more efficient and insightful.

Schools are always looking for ways to improve, and AI tools such as Large Language Models (LLMs) are proving especially helpful.

This week on the Holistic Success Show, we welcome Dr. Deborah Chang, Director of Curriculum Evaluation at the Joe R. and Theresa Lozano Long School of Medicine at UT Health San Antonio.

In this episode, we explore how Dr. Chang and her team are using LLMs to transform their curriculum review process.

What are Large Language Models?

Large Language Models (LLMs) are advanced AI systems trained on vast amounts of text to understand and generate human-like language. They use deep learning techniques and transformer architectures to perform a wide range of tasks, from text generation and summarization to language translation and sentiment analysis.

With billions of parameters, LLMs can produce contextually relevant, coherent responses, and they are already reshaping industries such as customer service, content creation, and research.

Why use LLMs in curriculum review?

Adopting LLMs can be immensely helpful for teams looking to improve the way they collect feedback and review their programs. Most schools regularly gather both quantitative and qualitative feedback, which adds up to a substantial volume of data. This is a lot to review!

Over time, manually reviewing all of this feedback becomes increasingly challenging, and many teams begin looking to AI to make the evaluation process more efficient.

What challenges do teams face with traditional methods of curriculum review?

Traditional methods involve manually reading and coding narrative feedback, which is time-consuming and unsustainable given the volume of data.

Teams often send raw data reports to course directors and committee members, hoping they can interpret the information effectively. The pressure to provide timely and useful feedback is a constant challenge, highlighting the need for a more efficient system.

How effective are LLMs?

When Dr. Chang and her team began implementing LLMs, they tested the approach in three settings: curriculum review reports, weekly feedback, and reflection meetings. The results were promising.

The reports generated by LLMs not only matched the themes the team had identified manually but also provided more detailed insights and specific examples. This allowed for quicker turnaround and more comprehensive feedback, which faculty received well.

The AI tools significantly reduced the workload, enabling the team to focus on providing valuable context and guidance.

Are there other areas where AI can be applied?

Using AI in real time during meetings is a promising area for further exploration. The ability to quickly analyze raw data and provide immediate insights during discussions could greatly improve decision-making. Integrating AI into more aspects of the evaluation process could further streamline workflows and improve the overall quality of feedback.

What benefits were discovered from using LLMs?

The use of LLMs has brought numerous benefits to the curriculum evaluation process.

One significant advantage is the ability to synthesize large amounts of data quickly, resulting in more detailed and timely reports. Faculty and committee members now receive summaries that are both thorough and actionable. This has strengthened the school's continuous quality improvement processes, ensuring that feedback is actually used to improve the curriculum.

Moreover, because the AI-generated reports matched the themes identified manually, faculty have more confidence in the findings. Seeing the AI's analysis align with human-generated themes reassures faculty members that the insights are accurate and reliable. This has also encouraged a more open-minded approach to integrating AI tools into other areas of curriculum management.

Key takeaway

Integrating Large Language Models at the Joe R. and Theresa Lozano Long School of Medicine shows how AI can transform curriculum evaluation. Dr. Deborah Chang and her team have shown that starting with small AI projects and expanding them can significantly improve feedback processes. These changes have made their work more efficient, provided detailed insights, and delivered timely feedback, making it easier for faculty to make informed decisions.

This success story highlights the potential of AI in education. By thoughtfully implementing AI tools like LLMs, schools can better manage and analyze data, which can lead to improved educational outcomes. Dr. Chang's experience is a great example for other schools looking to innovate, showing that AI can enhance curriculum management and overall quality.

Subscribe to the Holistic Success Show to hear more inspiring stories and innovative approaches in the world of education.

Related Articles

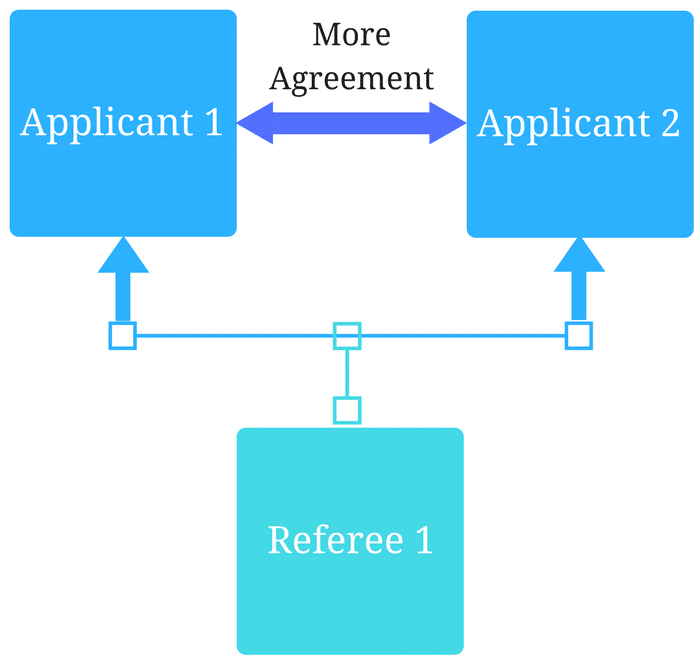

How interviews could be misleading your admissions...

Most schools consider the interview an important portion of their admissions process, hence a considerable…

Reference letters in academic admissions: useful o...

Because of the lack of innovation, there are often few opportunities to examine current legacy…